Contents

- 1 The Current State of AI in Healthcare

- 2 The Optimistic Vision: Transformative Benefits of AI in Medicine

- 3 The Pessimistic Perspective: Risks and Concerns

- 3.1 Algorithmic Bias and Health Inequities

- 3.2 Privacy Violations and Data Security Risks

- 3.3 The Black Box Problem and Accountability

- 3.4 Safety Concerns and Medical Errors

- 3.5 Erosion of the Human Element in Healthcare

- 3.6 Regulatory Gaps and Governance Challenges

- 3.7 Economic Disruption and Job Displacement

- 4 Finding Balance: A Path Forward

- 5 Conclusion: Embracing Complexity

AI-powered medical technology is transforming the future of healthcare delivery

The intersection of artificial intelligence and healthcare represents

one of the most transformative developments in modern medicine. As we

navigate through 2025, AI technologies are no longer experimental

curiosities confined to research laboratories—they have become integral

components of healthcare systems worldwide, fundamentally reshaping how

we diagnose diseases, treat patients, and manage health outcomes. This

revolution brings with it both extraordinary promise and significant

challenges that demand our careful attention.

The Current State of AI in Healthcare

The healthcare industry has witnessed an unprecedented surge in AI

adoption over recent years. By August 2024, the U.S. Food and Drug

Administration had authorized approximately 950 medical devices

incorporating artificial intelligence or machine learning capabilities,

with the majority focused on disease detection and diagnosis,

particularly in radiology. This explosive growth reflects a broader

trend: AI-related healthcare publications have skyrocketed from just 158

in 2014 to 731 in 2024, demonstrating the field’s rapid maturation.

Today’s AI applications in healthcare span an impressive range of

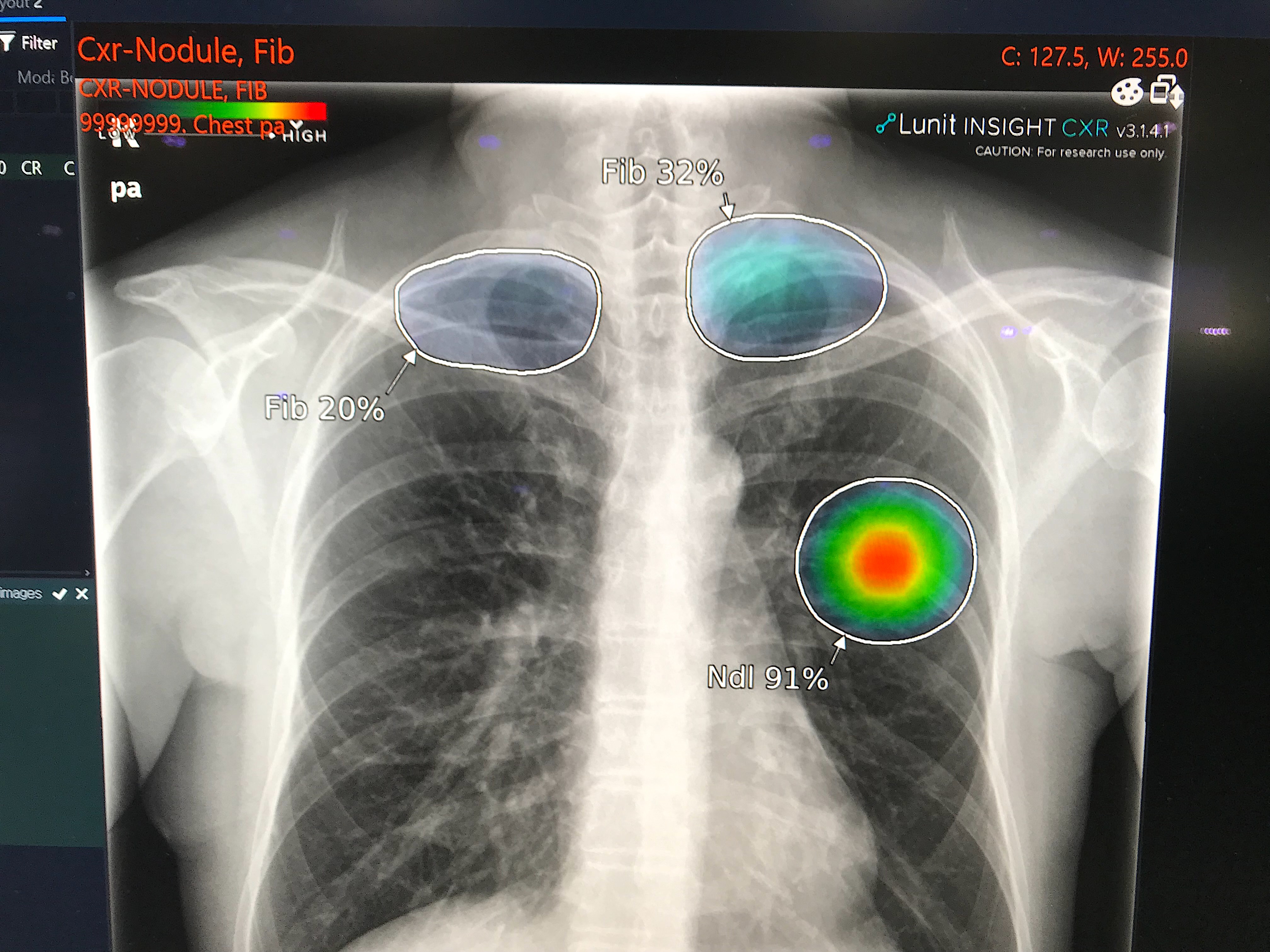

capabilities. Machine learning algorithms are achieving remarkable

accuracy in medical imaging, with some systems detecting lung nodules

with 94% accuracy and identifying breast cancer with 90%

sensitivity—often matching or surpassing human radiologists. These

systems leverage sophisticated deep learning architectures, particularly

convolutional neural networks (CNNs), to analyze medical images and

identify patterns that might escape human observation.
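Accuracy and sensitivity, the two figures quoted above, measure different things, and a short sketch may help show how they relate. The counts below are invented for illustration and do not come from any study cited here; the `ConfusionCounts` class and the function names are likewise hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Tallies from comparing model output with the confirmed diagnosis."""
    true_pos: int   # disease present, model flagged it
    false_pos: int  # disease absent, model flagged it anyway
    true_neg: int   # disease absent, model stayed quiet
    false_neg: int  # disease present, model missed it


def accuracy(c: ConfusionCounts) -> float:
    """Share of all cases the model classified correctly."""
    total = c.true_pos + c.false_pos + c.true_neg + c.false_neg
    return (c.true_pos + c.true_neg) / total


def sensitivity(c: ConfusionCounts) -> float:
    """Share of truly diseased cases the model caught."""
    return c.true_pos / (c.true_pos + c.false_neg)


def precision(c: ConfusionCounts) -> float:
    """Share of flagged cases that truly had the disease."""
    return c.true_pos / (c.true_pos + c.false_pos)


# Made-up screening set: 1,000 scans, 50 of which truly show disease.
counts = ConfusionCounts(true_pos=45, false_pos=55, true_neg=895, false_neg=5)

print(f"accuracy:    {accuracy(counts):.2f}")
print(f"sensitivity: {sensitivity(counts):.2f}")
print(f"precision:   {precision(counts):.2f}")
```

With these made-up counts the model is 94% accurate and 90% sensitive, yet only 45% of the scans it flags are true positives. Headline figures are easier to interpret when the disease prevalence in the test set is reported alongside them.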

Beyond diagnostics, AI is revolutionizing clinical workflows through

ambient listening technology that automates clinical documentation,

retrieval-augmented generation (RAG) systems that enable accurate data

querying, and intelligent clinical coding that reduces administrative

burdens. Virtual health assistants powered by AI are handling patient

triage, answering routine inquiries, and managing appointment

scheduling, freeing healthcare professionals to focus on complex medical

decision-making.

The technology has also made significant inroads in drug discovery,

where AI accelerates the identification of new therapeutic targets and

optimizes clinical trial design. Predictive analytics platforms are

analyzing electronic health records to anticipate disease progression,

identify patients at risk for complications, and recommend personalized

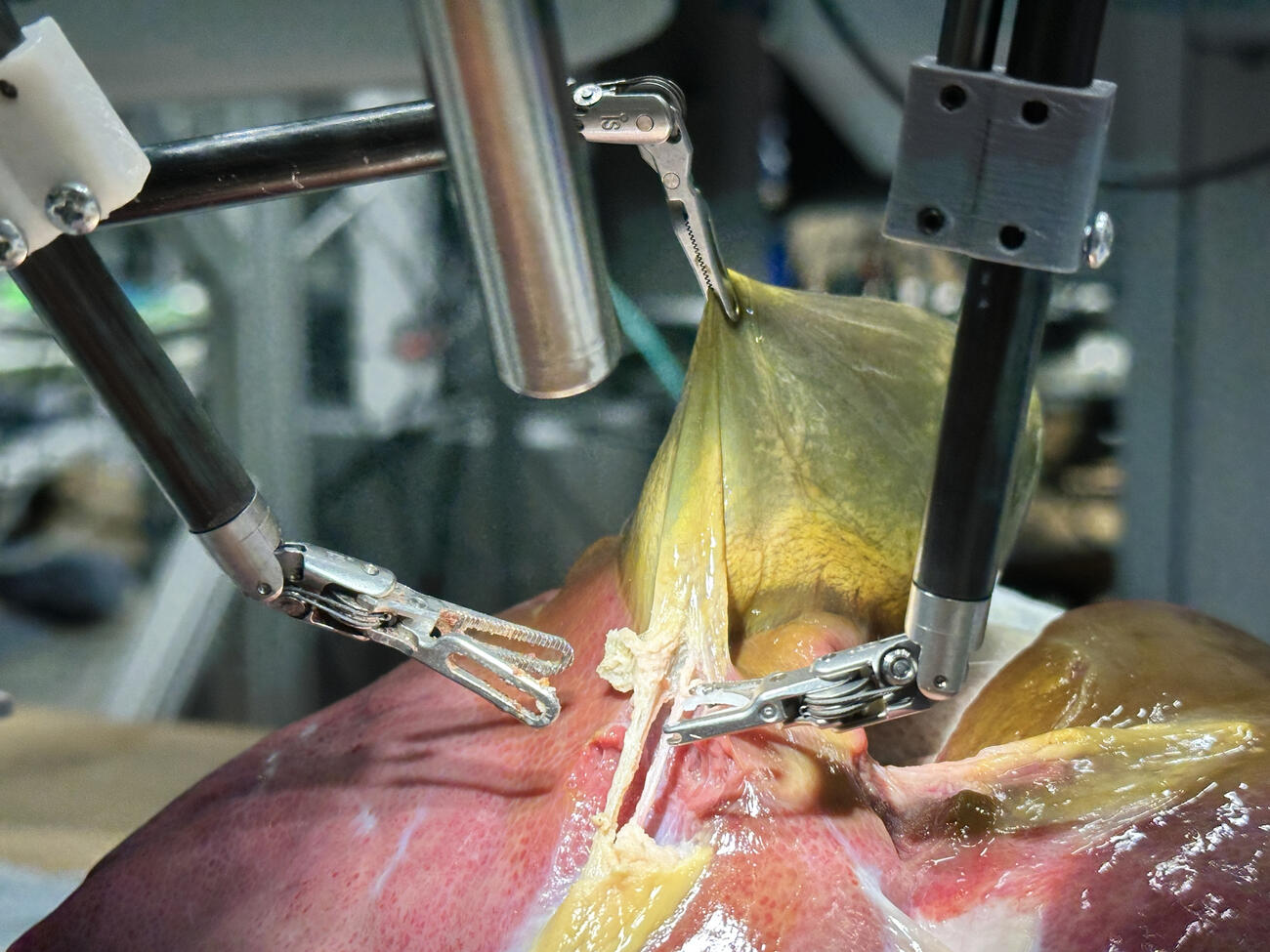

treatment strategies. In surgical settings, AI-assisted robotic systems

are enabling unprecedented precision in complex procedures, from

neurosurgery to dental operations.

The Optimistic Vision: Transformative Benefits of AI in Medicine

The potential benefits of AI in healthcare are nothing short of

revolutionary, promising to address some of medicine’s most persistent

challenges while opening new frontiers in patient care.

Enhanced Diagnostic Accuracy and Early Detection

Perhaps AI’s most celebrated contribution lies in its diagnostic

capabilities. AI systems can process vast amounts of medical data—from

imaging studies to genomic sequences to clinical histories—identifying

subtle patterns and correlations that might elude even experienced

clinicians. This capability is particularly valuable in early disease

detection, where AI algorithms can spot precancerous lesions, identify

cardiovascular risks from retinal scans, and detect diabetic retinopathy

before symptoms appear.

Recent breakthroughs include AI models that can predict genetic risks

for hereditary diseases, analyze pathology slides for cancer detection

with remarkable precision, and even forecast individual disease risks

for over 1,000 conditions—akin to weather forecasting for health. These

predictive capabilities enable proactive interventions that can prevent

disease progression or catch conditions at their most treatable

stages.

Personalized and Precision Medicine

AI is ushering in an era of truly personalized healthcare. By

integrating multimodal data—genomic information, medical imaging,

clinical records, lifestyle factors, and real-time monitoring from

wearable devices—AI systems can tailor treatment plans to individual

patients with unprecedented specificity. This approach optimizes drug

selection and dosing, predicts treatment responses, and identifies the

most effective interventions based on a patient’s unique biological and

clinical profile.

In oncology, AI analyzes tumor characteristics to recommend targeted

therapies. In cardiology, it predicts which patients will benefit most

from specific interventions. In chronic disease management, AI-powered

platforms continuously monitor patient data, adjusting treatment

recommendations in real-time to optimize outcomes while minimizing side

effects.

Operational Efficiency and Cost Reduction

The economic impact of AI in healthcare extends far beyond improved

clinical outcomes. By automating routine tasks—from documentation and

billing to lab result interpretation and appointment scheduling—AI

dramatically reduces administrative overhead that currently consumes up

to 30% of healthcare spending in many systems. This efficiency

translates directly into cost savings that can make healthcare more

accessible and affordable.

AI-driven workflow optimization helps hospitals manage patient

throughput more effectively, reducing wait times and improving resource

allocation. Predictive analytics identify patients at high risk for

readmission, enabling targeted interventions that prevent costly

emergency department visits. In clinical trials, AI accelerates patient

recruitment and monitors safety signals, potentially reducing drug

development costs by billions of dollars.

Democratizing Healthcare Access

Perhaps most profoundly, AI has the potential to democratize access

to high-quality healthcare. Telemedicine platforms enhanced with AI

diagnostic tools can bring specialist-level expertise to remote and

underserved communities. AI-powered triage systems help patients

navigate complex healthcare systems, directing them to appropriate care

levels and reducing unnecessary emergency department visits.

Virtual health assistants provide 24/7 support for medication

management, symptom checking, and health education, empowering patients

to take active roles in their care. For healthcare systems struggling

with physician shortages, AI augments human capabilities, enabling

smaller teams to serve larger populations without compromising care

quality.

Advancing Medical Research and Innovation

AI is accelerating the pace of medical discovery in ways previously

unimaginable. Machine learning algorithms can analyze millions of

research papers, clinical trials, and patient records to identify

promising research directions and generate new hypotheses. In drug

discovery, AI screens billions of molecular compounds to identify

potential therapeutics, a process that once took years but now occurs in

months or weeks.

The technology is also revealing new insights into disease mechanisms

by identifying patterns in complex biological data that human

researchers might never detect. These discoveries are opening new

avenues for treatment and prevention, potentially leading to

breakthroughs in conditions that have long resisted medical

intervention.

The Pessimistic Perspective: Risks and Concerns

While AI’s potential in healthcare is undeniable, its implementation

raises serious concerns that cannot be dismissed or minimized. These

challenges span technical, ethical, social, and regulatory domains, each

presenting obstacles that could undermine AI’s benefits or even cause

harm if not properly addressed.

Algorithmic Bias and Health Inequities

One of the most troubling concerns surrounding AI in healthcare is

the potential for algorithmic bias to perpetuate or even exacerbate

existing health disparities. AI systems learn from historical data, and

if that data reflects societal biases or underrepresents certain

populations, the resulting algorithms will inherit and amplify those

biases.

Studies have documented numerous instances where AI diagnostic tools

perform poorly on underrepresented demographic groups—women, racial

minorities, and patients from lower socioeconomic backgrounds. An AI

system trained primarily on data from one population may misdiagnose

conditions in others, leading to delayed treatment or inappropriate

interventions. This risk is particularly acute in conditions that

present differently across demographic groups or in populations that

have historically received inadequate healthcare.

The consequences of biased AI extend beyond individual misdiagnoses.

If AI-driven resource allocation systems are trained on biased data,

they may systematically direct resources away from already underserved

communities, widening health equity gaps. Without careful attention to

data diversity and algorithmic fairness, AI could become a tool that

reinforces rather than reduces healthcare inequalities.

Privacy Violations and Data Security Risks

AI’s hunger for data creates profound privacy concerns. Healthcare AI

systems require vast amounts of sensitive personal information—medical

histories, genetic data, lifestyle information, and real-time health

monitoring data. This concentration of sensitive data creates attractive

targets for cyberattacks and raises questions about who controls this

information and how it might be used.

Current privacy frameworks like GDPR and HIPAA, while important, were

designed for an earlier era and struggle to address AI-specific

challenges. Advanced algorithms can potentially re-identify supposedly

anonymized data, undermining privacy protections. The commercial

incentives surrounding health data—from pharmaceutical companies to

insurance providers to technology firms—create pressures that may not

align with patient interests.
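The re-identification risk mentioned above can be made concrete with a k-anonymity check, one common way to reason about it. The sketch below is illustrative only: the records are invented, the choice of quasi-identifiers is an assumption, and the function names are hypothetical.

```python
from collections import Counter


def group_sizes(rows, quasi_identifiers):
    """Count how many records share each combination of quasi-identifiers."""
    return Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)


def k_anonymity(rows, quasi_identifiers):
    """Smallest group size; k == 1 means at least one record is unique."""
    return min(group_sizes(rows, quasi_identifiers).values())


# Invented "anonymized" records: names removed, other fields kept.
records = [
    {"zip": "02139", "birth_year": 1961, "sex": "F", "diagnosis": "diabetes"},
    {"zip": "02139", "birth_year": 1961, "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1984, "sex": "M", "diagnosis": "hypertension"},
    {"zip": "02142", "birth_year": 1984, "sex": "M", "diagnosis": "melanoma"},
]

k = k_anonymity(records, ["zip", "birth_year", "sex"])
k_coarse = k_anonymity(records, ["birth_year", "sex"])

print("k with ZIP code included:", k)
print("k after dropping ZIP code:", k_coarse)
```

In this toy data two records are unique on ZIP code, birth year, and sex, so anyone who knows those three facts about a person could look up that person's diagnosis. Dropping or coarsening a field raises k at the cost of detail, which is the trade-off that de-identification has to manage.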

Data breaches in healthcare can have devastating consequences beyond

financial loss. Exposed health information can lead to discrimination in

employment, insurance, and social contexts. The permanent nature of

genetic data means that breaches can affect not just individuals but

their families and future generations. As AI systems become more

sophisticated and data sharing becomes more common, these risks

multiply.

The Black Box Problem and Accountability

Many AI systems, particularly those using deep learning, operate as

“black boxes”—their decision-making processes are opaque even to their

creators. When an AI system recommends a diagnosis or treatment,

clinicians and patients often cannot understand the reasoning behind

that recommendation. This opacity creates serious problems for medical

practice, which traditionally relies on transparent reasoning and the

ability to explain decisions to patients.

The black box problem becomes particularly acute when AI makes

errors. Recent research from the National Institutes of Health found

that AI models can make mistakes in explaining medical images even when

reaching correct diagnoses, highlighting the disconnect between AI’s

outputs and its reasoning. When an AI-assisted diagnosis proves wrong,

determining accountability becomes nearly impossible. Is the AI

developer responsible? The healthcare provider who relied on the AI? The

institution that deployed the system?

This lack of transparency erodes trust—both among healthcare

providers who may be reluctant to rely on systems they don’t understand,

and among patients who may feel their care is being determined by

inscrutable algorithms. It also complicates the informed consent

process, as patients cannot truly understand the risks and benefits of

AI-assisted care when the systems themselves are opaque.

Safety Concerns and Medical Errors

While AI promises to reduce medical errors, it also introduces new

categories of risk. AI systems can fail in unexpected ways, producing

confident but incorrect recommendations that may mislead clinicians. The

phenomenon of “automation bias”—the tendency to over-rely on automated

systems—means that healthcare providers might accept AI recommendations

without sufficient critical evaluation, particularly when workload

pressures are high.

AI systems trained on one population or in one clinical setting may

perform poorly when deployed elsewhere, a problem known as dataset shift

(or distribution shift). A diagnostic algorithm that works well in a

well-resourced academic medical center might fail catastrophically in a

rural clinic with different patient populations and equipment. These

failures may not be immediately apparent, potentially causing harm

before they’re detected and corrected.
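One way a deployment gap like this might be caught early is to compare the patients a model was trained on with the patients it now sees. The sketch below uses a standardized mean difference on a single feature; the age values are invented, the function name is hypothetical, and the 0.1 threshold is a common rule of thumb adopted here as an assumption rather than a validated cutoff.

```python
from statistics import mean, stdev


def standardized_mean_difference(train, deployed):
    """Difference in means, scaled by the pooled standard deviation.

    Assumes each sample has at least two values and is not constant.
    """
    pooled_sd = ((stdev(train) ** 2 + stdev(deployed) ** 2) / 2) ** 0.5
    return (mean(deployed) - mean(train)) / pooled_sd


# Invented patient ages at the training site and at a new clinic.
train_ages = [50, 55, 60, 65, 70]
clinic_ages = [60, 65, 70, 75, 80]

smd = standardized_mean_difference(train_ages, clinic_ages)
SHIFT_THRESHOLD = 0.1  # assumed rule-of-thumb cutoff

print(f"standardized mean difference: {smd:.2f}")
if abs(smd) > SHIFT_THRESHOLD:
    print("Population differs from training data; revalidate before relying on the model.")
```

A check like this only flags that the inputs have changed. It does not show that the model's performance has degraded, which still requires evaluation against confirmed outcomes at the new site.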

The rapid pace of AI development also creates safety challenges.

Unlike traditional medical devices that undergo extensive testing before

deployment, AI systems can be updated continuously, potentially

introducing new bugs or behaviors without adequate validation. The lack

of standardized testing protocols and post-market surveillance for AI

systems means that problems may go undetected until significant harm has

occurred.

Erosion of the Human Element in Healthcare

Healthcare is fundamentally a human endeavor, built on relationships,

empathy, and trust between patients and providers. The increasing role

of AI raises concerns about the dehumanization of medicine. When

algorithms mediate the patient-provider relationship, there’s a risk

that the compassionate, holistic aspects of care—understanding patients’

fears, values, and life circumstances—may be diminished.

Clinicians report concerns that excessive reliance on AI could lead

to deskilling, where healthcare providers lose the ability to make

independent clinical judgments. Medical students trained in an

AI-saturated environment might never develop the pattern recognition and

intuitive reasoning that comes from extensive clinical experience. This

dependency could prove catastrophic if AI systems fail or are

unavailable.

For patients, particularly those dealing with serious illness or

end-of-life decisions, the presence of AI in medical decision-making can

feel alienating. The knowledge that an algorithm is influencing their

care may undermine the therapeutic relationship and reduce patient

agency in their own healthcare decisions.

Regulatory Gaps and Governance Challenges

The regulatory landscape for AI in healthcare is fragmented and

struggling to keep pace with technological advancement. Different

jurisdictions have different standards, creating confusion and

potentially allowing unsafe systems to reach patients. Many AI systems,

particularly those developed in-house by healthcare institutions, may

evade regulatory oversight entirely.

Current regulations often fail to address AI-specific challenges like

algorithmic bias, continuous learning systems that change over time, and

the use of AI in clinical decision support versus autonomous

decision-making. The lack of clear liability frameworks means that when

AI-related harm occurs, victims may have no clear path to compensation

or justice.

International coordination on AI governance remains weak, despite the

global nature of both AI development and healthcare challenges. Without

harmonized standards and robust oversight mechanisms, the risks of AI in

healthcare may outweigh its benefits, particularly for vulnerable

populations.

Economic Disruption and Job Displacement

The efficiency gains from AI come with a human cost. Automation of

administrative tasks, diagnostic procedures, and even some aspects of

clinical care threatens jobs across the healthcare sector. Radiologists,

pathologists, medical coders, and administrative staff face potential

displacement as AI systems take over tasks they once performed.

While proponents argue that AI will augment rather than replace

healthcare workers, the reality may be more complex. Economic pressures

on healthcare systems create incentives to reduce labor costs, and AI

provides a means to do so. The resulting job losses could be

particularly severe in already economically disadvantaged communities,

exacerbating social inequalities.

Moreover, the concentration of AI development in wealthy nations and

large technology companies raises concerns about global health equity.

If AI-driven healthcare becomes the standard in high-income countries

while remaining inaccessible elsewhere, the global health divide could

widen dramatically.

Finding Balance: A Path Forward

The future of AI in healthcare need not be a choice between

uncritical enthusiasm and paralyzing fear. Instead, we must chart a

middle course that maximizes benefits while actively mitigating risks

through thoughtful governance, ethical frameworks, and ongoing

vigilance.

Establishing Robust Ethical Frameworks

Healthcare AI must be developed and deployed within clear ethical

frameworks that prioritize patient welfare, equity, and human dignity.

These frameworks should mandate transparency in AI decision-making,

require diverse representation in training data, and establish clear

lines of accountability when systems fail. Ethical review boards should

evaluate AI systems before deployment, with ongoing monitoring to detect

emerging problems.

Informed consent processes must evolve to address AI-specific

considerations, ensuring patients understand when and how AI influences

their care and preserving their right to opt out of AI-assisted

treatment. Healthcare providers need training not just in using AI tools

but in critically evaluating their recommendations and maintaining their

independent clinical judgment.

Strengthening Regulatory Oversight

Regulatory frameworks must adapt to AI’s unique challenges. This

requires international coordination to establish harmonized standards,

mandatory post-market surveillance to detect problems after deployment,

and clear liability rules that protect patients while encouraging

responsible innovation. Regulations should be adaptive, evolving as AI

technology advances, rather than static rules that quickly become

obsolete.

Special attention must be paid to AI systems that operate

autonomously or make high-stakes decisions. These should face more

stringent oversight than AI tools that merely assist human

decision-makers. Regulatory agencies need adequate resources and

expertise to evaluate AI systems effectively, including the ability to

audit algorithms and training data.

Addressing Bias and Promoting Equity

Combating algorithmic bias requires proactive measures throughout the

AI development lifecycle. Training datasets must be diverse and

representative, with explicit efforts to include underrepresented

populations. Algorithms should be regularly audited for bias, with

results made public to enable accountability. When bias is detected,

systems should be retrained or retired rather than deployed with known

limitations.
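A bias audit of the kind described above could start by computing the same performance metric separately for each demographic group. The sketch below does this for sensitivity; the group labels, the records, and the function names are all hypothetical, and a real audit would cover more metrics and account for sample size.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, has_disease, model_flagged) tuples."""
    diseased = defaultdict(int)
    caught = defaultdict(int)
    for group, has_disease, model_flagged in records:
        if has_disease:
            diseased[group] += 1
            if model_flagged:
                caught[group] += 1
    # Only groups with at least one diseased patient appear in the result.
    return {group: caught[group] / n for group, n in diseased.items()}


def largest_gap(rates):
    """Difference between the best-served and worst-served group."""
    return max(rates.values()) - min(rates.values())


# Invented audit sample: (group, has_disease, model_flagged).
audit_sample = (
    [("A", True, True)] * 9 + [("A", True, False)] * 1
    + [("B", True, True)] * 6 + [("B", True, False)] * 4
    + [("A", False, False)] * 5
)

rates = sensitivity_by_group(audit_sample)
for group, rate in sorted(rates.items()):
    print(f"group {group}: sensitivity {rate:.2f}")
print(f"largest gap: {largest_gap(rates):.2f}")
```

In this toy sample the model catches 90% of cases in group A but only 60% in group B, a gap that an overall sensitivity figure of 75% would hide.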

Healthcare institutions deploying AI must monitor outcomes across

demographic groups to detect disparities early. Resources should be

directed toward ensuring that AI benefits reach underserved communities

rather than widening existing gaps. This may require subsidies,

infrastructure investments, and targeted deployment strategies that

prioritize equity over profit.

Protecting Privacy and Security

Robust data protection measures must be non-negotiable. This includes

strong encryption, strict access controls, and data minimization

principles that limit collection to what’s truly necessary. Privacy

frameworks should give patients meaningful control over their health

data, including the right to know how it’s used and the ability to

revoke consent.

Security standards for healthcare AI systems should be rigorous, with

regular audits and penetration testing. Breach notification requirements

should be strengthened, and penalties for negligent data handling should

be substantial enough to incentivize proper security practices.

International agreements on health data sharing should balance research

needs with privacy protection.

Investing in Human Capital

Rather than viewing AI as a replacement for healthcare workers, we

should invest in training that enables professionals to work effectively

alongside AI systems. Medical education should incorporate AI literacy,

teaching future clinicians how to interpret AI recommendations

critically and when to override them. Continuing education programs

should help current practitioners adapt to AI-augmented practice.

Support should be provided for workers whose roles are disrupted by

AI, including retraining programs and transition assistance. Healthcare

systems should be designed to leverage AI’s efficiency gains to improve

working conditions and reduce burnout rather than simply cutting costs

through workforce reductions.

Fostering Collaborative Innovation

The development of healthcare AI should involve diverse

stakeholders—clinicians, patients, ethicists, regulators, and

technologists—working together from the earliest stages. Patient

advocacy groups should have meaningful input into AI development

priorities and deployment decisions. Clinical validation should be

rigorous and transparent, with results published regardless of

outcome.

Open science principles should guide AI research, with algorithms and

datasets shared when possible to enable independent validation and

improvement. Public funding should support AI development that addresses

unmet medical needs rather than just profitable applications.

International collaboration should focus on ensuring that AI benefits

global health rather than widening disparities between nations.

Conclusion: Embracing Complexity

The future of AI in healthcare is neither utopian nor dystopian—it is

complex, contingent, and still being written. The technology offers

genuine opportunities to improve health outcomes, increase efficiency,

and extend quality care to more people. But these benefits are not

automatic or inevitable. They depend on choices we make today about how

to develop, regulate, and deploy AI systems.

We must resist both the temptation to embrace AI uncritically and the

impulse to reject it entirely. Instead, we need sustained engagement

with the technology’s complexities, honest acknowledgment of its

limitations and risks, and commitment to ensuring that it serves human

flourishing rather than narrow commercial interests.

The healthcare AI revolution will succeed or fail based not on the

sophistication of algorithms but on the wisdom of the humans who create

and govern them. By centering patient welfare, prioritizing equity,

demanding transparency, and maintaining human judgment at the heart of

medical care, we can harness AI’s transformative potential while

protecting against its risks.

The future of healthcare is being built now, in research

laboratories, hospital systems, regulatory agencies, and policy debates

around the world. The decisions we make in this pivotal moment will

shape medicine for generations to come. We must choose wisely, guided by

evidence, ethics, and an unwavering commitment to the fundamental

principle that healthcare exists to serve human health and dignity.

As we stand at this crossroads, one thing is clear: the question is

not whether AI will transform healthcare, but how—and whether that

transformation will make medicine more humane, equitable, and effective,

or less so. The answer lies in our hands.

This article explores the multifaceted impact of artificial

intelligence on healthcare, examining both the transformative potential

and significant challenges that accompany this technological revolution.

As AI continues to evolve, ongoing dialogue among all stakeholders will

be essential to ensuring that innovation serves the broader goals of

health equity and human welfare.